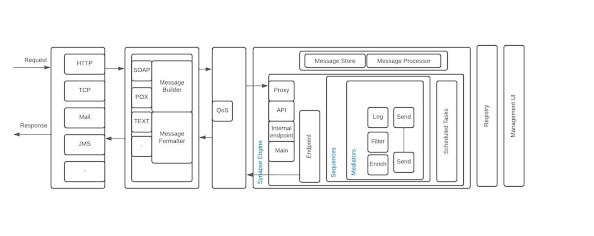

Messaging Architecture

Day to day collection from work and self learning in QA, middleware, tech support spaces ..

Monday, June 13, 2022

Back in WSO2 ..❤️

It's been more than 5 years since I last blogged here! That's also almost exactly how long I was away. Away from WSO2.

During the last 5+ years I worked for an organization in the telecommunication domain. I joined them to head production support for the WSO2-based product platform they had, then gradually picked up delivery and product management, and finally headed a product department. Not only that, I was moved across 3 company logos within the same group!! I guess that explains the dynamics. It was entirely a roller-coaster ride, made okay by certain leads and the dear teams I engaged with during the journey.

This June, I joined WSO2 again. I am very thankful to Sanjiva and the Customer Success leadership for accepting me.

Today's post is all to say how grateful it feels to join again.

The onboarding program at WSO2 simply reconfirms how well you will be looked after.

It started with the HR teams collecting my data, the personalized couriering of a brand new Apple MacBook Pro and a broad welcome greeting, followed by the amazing onboarding orientation. WSO2 paid so much attention to every detail. For example, the EPF form was sent home with an ink pad, and the filled form was collected by a company-arranged courier. There was a comprehensive hands-on session with digi-ops to set up the laptop, mail, apps, etc., so you can operate like everyone else from day 1 itself! Each department joined the induction program to tell new employees what they do and how we can engage. I am sure each batch must feel very special and totally ready by the time they are through this program.

Induction hands you over to your lead, who discusses their planned programs, with the necessary buddy support etc.

Yeap.. That was my first 4 days after re-joining!

Looking forward to serving at my best and enjoying every minute of it!

Saturday, June 27, 2015

Patch Management System - developed, maintained, enhanced using WSO2 Carbon products

WSO2 support patching in brief

WSO2 support issues patches to fix defects found in product setups. This patching procedure needs to be handled methodically: the fix you apply should correctly solve the issue, it should not introduce regressions, it should be committed to the relevant public code base, and it should be committed to the support environment and validated to fit correctly with the existing code base.

Patch Management Tool (PMT)

In an environment with a large customer base that expects many clarifications daily, there is a chance of developers missing certain best practices. Therefore the process itself should be embedded in the system, so that it enforces and reminds you of what needs to be done. The WSO2 Patch Management Tool (PMT) was built for this purpose.

In the above process, we also need to maintain a database of patch information. In the WSO2 support system, applying a patch to a server involves a method which ensures that a new fix is correctly applied to the product binaries. This patch applying procedure relies mainly on a numbering system: every patch has its own number. Since we maintain many platform versions at the same time, there is a parallel numbering sequence for each WSO2 Carbon platform version. All of this data should be correctly archived and available as an index when you need to create a new patch. PMT facilitates this by being the hub for all patches. Any developer who creates a new patch obtains the patch number for the work from PMT.
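The idea of parallel numbering per platform version can be sketched in a few lines. This is only an illustration of the concept, not PMT's actual implementation; the class name, method names and the version strings used below are hypothetical.

```python
from collections import defaultdict


class PatchNumberRegistry:
    """Hands out sequential patch numbers, one sequence per platform version.

    Hypothetical sketch: PMT itself stores this in the registry, not in memory.
    """

    def __init__(self):
        self._last_issued = defaultdict(int)  # platform version -> last number issued
        self._owners = {}                     # (platform version, number) -> developer

    def next_patch_number(self, platform_version, developer):
        # Each platform version advances its own counter independently.
        self._last_issued[platform_version] += 1
        number = self._last_issued[platform_version]
        self._owners[(platform_version, number)] = developer
        return number

    def owner_of(self, platform_version, number):
        # Returns None when no such patch number has been issued.
        return self._owners.get((platform_version, number))


registry = PatchNumberRegistry()
first = registry.next_patch_number("4.2.0", "dev_a")
second = registry.next_patch_number("4.2.0", "dev_b")
other = registry.next_patch_number("4.0.0", "dev_a")
print(first, second, other)
```

Because every request goes through one registry, two developers working on the same platform version can never receive the same number, while different platform versions keep independent sequences.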

The WSO2 support system involves many developers working in parallel on different or the same product components, based on the customer issues they are handling. In such a space, it is important to archive metadata such as the jar versions that are patched, commit revisions, patch location, integration test cases, integration test revisions, the developer/QA who worked on the patch and the patch release date. This information is needed at the time of a conflict or an error. PMT provides an environment to capture it.

As described above, the main purposes of PMT are managing the patching process, generating patch numbers, archiving patch metadata and providing a search facility. It was initially introduced and designed by Samisa as a pet project, for which Lasindu and Pulasthi helped by writing extensions as part of their intern projects, and Yumani helped with QA.

To cater to the project requirements, WSO2 Governance Registry was chosen as the back-end server, with the WSO2 user base connected via WSO2 IS as the user store and MySQL for the registry. Later WSO2 User Engagement Server was integrated as the presentation layer, using the Jaggery framework to develop the presentation logic. From WSO2 G-Reg, we are using the RXT, LifeCycle and handler concepts, the search/filtering facilities and the governance API. From WSO2 UES we are using the webapp hosting capability. WSO2 IS provides the LDAP user store.

In the following sections I will briefly describe how PMT evolved with different product versions, absorbing new features and enhancements.

First version in wso2greg-4.5.1

Our requirement was to capture data on the JIRA's basic information, the client/s to whom it's issued, people involved, dates, related documentation and repositories. So the RXT was categorized into tables as Overview, People Information, JIRA Information, Patch Information, Test Information and Dates.

<content>

<table name="Overview">

</table>

<table name="People Involved">

</table>

<table name="Patch Information">

</table>

<table name="Test Information">

</table>

<table name="Dates">

</table>

</content>

Each <table> above holds the attributes related to it. Most of these were captured via <field type="options"> or <field type="text">.

The above RXT (patchRXT) was associated with a Lifecycle to manage the patching process. The Patch LifeCycle involves main states such as Development, ReadyForQA, Testing and Release. Each state includes a set of check list items, which list the tasks that a developer or QA needs to follow while in that particular Lifecycle state.

Sample code segment below shows the configuration of the 'testing' state:

<state id="Testing">

<datamodel>

<data name="checkItems">

<item name="Verified patch zip file format" forEvent="Promote">

</item>

<item name="Verified README" forEvent="Promote">

</item>

<item name="Verified EULA" forEvent="Promote">

</item>

<item name="Reviewed the automated tests provided for the patch">

</item>

<item name="Reviewed info given on public/support commits" forEvent="Promote">

</item>

<item name="Verified the existance of previous patches in the test environment" forEvent="Promote">

</item>

<item name="Auotmated tests framework run on test environment">

</item>

<item name="Checked md5 checksum for jars for hosted and tested" forEvent="Promote">

</item>

<item name="Patch was signed" forEvent="Promote">

</item>

<item name="JIRA was marked resolved" forEvent="Promote">

</item>

</data>

<data name="transitionUI">

<ui forEvent="Promote" href="../patch/Jira_tanstionUI_ajaxprocessor.jsp"/>

<ui forEvent="ReleasedNotInPublicSVN" href="../patch/Jira_tanstionUI_ajaxprocessor.jsp"/>

<ui forEvent="ReleasedNoTestsProvided" href="../patch/Jira_tanstionUI_ajaxprocessor.jsp"/>

<ui forEvent="Demote" href="../patch/Jira_tanstionUI_ajaxprocessor.jsp"/>

</data>

</datamodel>

<transition event="Promote" target="Released"/>

<transition event="ReleasedNotInPublicSVN" target="ReleasedNotInPublicSVN"/>

<transition event="ReleasedNoTestsProvided" target="ReleasedNoTestsProvided"/>

<transition event="Demote" target="FailedQA"/>

</state>

As you may have noticed, the Lifecycle has a transitionUI, through which the user is given an additional interface between state changes. In the above, once all check list items of the Testing state are completed, the state transition pauses to log the time that was spent on the tasks. This information directly updates the live support JIRA and is used for billing purposes. The ui-handlers were used to generate this transition UI dynamically. Later in the cycle we removed it, when Jagath and Parapran introduced a new time logging web application.

Given the environment factors, there were various paths on which a patch might need to be parked, so we had to introduce different intermediate states such as 'ReleasedNotInPublicSVN' and 'ReleasedNoTestsProvided'. The <transition event> tag was helpful in this. For example, a patch can be promoted to the 'Released', 'ReleasedNotInPublicSVN' or 'ReleasedNoTestsProvided' states, or it can be demoted to the 'FailedQA' state, using the <transition event> option.
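To make the behaviour of the Testing state easier to follow, here is a small sketch that models it as a lookup table. It is not G-Reg code; it only mirrors the transitions from the configuration above and assumes, as my reading of forEvent, that an item tagged forEvent="Promote" must be ticked before that event is allowed. Only a few of the check items are reproduced, and the function name is made up for the illustration.

```python
# A subset of the Testing state: check item name -> event it gates (None = gates nothing).
TESTING_STATE = {
    "check_items": {
        "Verified README": "Promote",
        "Patch was signed": "Promote",
        "Reviewed the automated tests provided for the patch": None,
    },
    # Event name -> target state, copied from the <transition> elements.
    "transitions": {
        "Promote": "Released",
        "ReleasedNotInPublicSVN": "ReleasedNotInPublicSVN",
        "ReleasedNoTestsProvided": "ReleasedNoTestsProvided",
        "Demote": "FailedQA",
    },
}


def next_state(state, event, checked_items):
    """Return the target state for an event, or raise if the event is not allowed."""
    if event not in state["transitions"]:
        raise ValueError(f"unknown event: {event}")
    # Collect the items that must be ticked for this particular event.
    required = {
        name for name, for_event in state["check_items"].items() if for_event == event
    }
    missing = required - set(checked_items)
    if missing:
        raise ValueError(f"unchecked items for {event}: {sorted(missing)}")
    return state["transitions"][event]


print(next_state(TESTING_STATE, "Demote", []))
print(next_state(TESTING_STATE, "Promote", ["Verified README", "Patch was signed"]))
```

The intermediate states need no ticked items in this sketch, which matches the idea of parking a patch when the normal Promote path is not possible.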

Performance Issues in G-Reg 4.5.1

Migrated to G-Reg 4.5.3

There were also RXT related enhancements: we could now have a pop-up calendar for dates, as opposed to the text box in the previous version. Filtering: in the earlier version patch filtering was done through a custom development which we had deployed in CARBON_HOME/repository/component/lib, but in the new version this was an inbuilt feature. There were also enhancements in G-Reg's governance API that we could leverage in generating reports and patch numbers.

Moving historical data to live environment.

This added 900+ patches to the live system and also affected performance as below. This was done using a data service to get data from spreadsheets and a Java client to add them to PMT [attached].

Looking for new avenues

Scalability:

After checking into the details, leading G-Reg engineers Ajith and Shelan proposed some changes to the data-sources configuration, which helped.

Introduced 'removeAbandoned' and 'removeAbandonedTimeout':

<removeAbandoned>true</removeAbandoned>

<removeAbandonedTimeout><!-- This value should be more than the longest possible running transaction --></removeAbandonedTimeout>

<logAbandoned>true</logAbandoned>

Updated 'maxActive' to a higher value than the default value of 80.

<maxActive>80</maxActive>

But we had a couple more problems: we noticed that the response time for listing the patches was unreasonably high. One of the reasons, isolated with the help of Ajith, was the default setting for versioning, which was set to true. In G-Reg, Lifecycle related data used to be stored as properties, so when <versioningProperties>true</versioningProperties> is set, the REG_RESOURCE_PROPERTY and REG_PROPERTY tables grow massively with resource updates. With the patch LifeCycle (which contains 20 check list items), one patch resource going through all the LC states adds more than 1100 records to REG_RESOURCE_PROPERTY and REG_PROPERTY when versioning is set to true.

Summary of Ajith's tests:

Versioning on:

Adding LC : 22

One click on check list item : 43

One promote or demote : 71

Versioning off:

Adding LC : 22

One click on check list item : 0

One promote or demote : 24
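A rough back-of-the-envelope calculation shows how these figures add up for a single patch. The sketch below assumes the numbers above are records added per action, that each of the 20 check list items is clicked once, and that the patch goes through four promote/demote transitions. The transition count is my assumption for illustration, not a measured value.

```python
# Records added per action, taken from the test summary above.
RECORDS_PER_ACTION = {
    True: {"attach_lifecycle": 22, "check_item_click": 43, "transition": 71},   # versioning on
    False: {"attach_lifecycle": 22, "check_item_click": 0, "transition": 24},   # versioning off
}


def estimated_records(versioning_on, check_item_clicks, transitions):
    """Estimate the property records added while one patch moves through its lifecycle."""
    cost = RECORDS_PER_ACTION[versioning_on]
    return (
        cost["attach_lifecycle"]
        + check_item_clicks * cost["check_item_click"]
        + transitions * cost["transition"]
    )


with_versioning = estimated_records(True, check_item_clicks=20, transitions=4)
without_versioning = estimated_records(False, check_item_clicks=20, transitions=4)
print(with_versioning, without_versioning)  # 1166 118
```

Under these assumptions a single patch adds 1166 records with versioning on, which is in line with the "more than 1100" observed, against 118 with versioning off.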

As a solution, the following were done:

1) Created a script to delete the unwanted properties

2) Disabled the versioning properties, comments and Ratings, from static configurations in registry.xml [2 - https://wso2.org/jira/browse/REGISTRY-1610]

<staticConfiguration>

<versioningProperties>false</versioningProperties>

<versioningComments>false</versioningComments>

<versioningTags>true</versioningTags>

<versioningRatings>false</versioningRatings>

<servicePath>/trunk/services/</servicePath>

</staticConfiguration>

Another bug was also found, related to artifact listing. There is a background task in G-Reg that caches all the generic artifacts. That helps us reduce the database calls when we need to retrieve the artifacts (they are read from the cache instead of the database). In G-Reg 4.5.3 it did not work for custom artifacts such as patchRXT; it only worked for service, policy, wsdl and schema. This issue was patched by the G-Reg team.

Process Validation:

Jaggery based web application and GReg upgrade

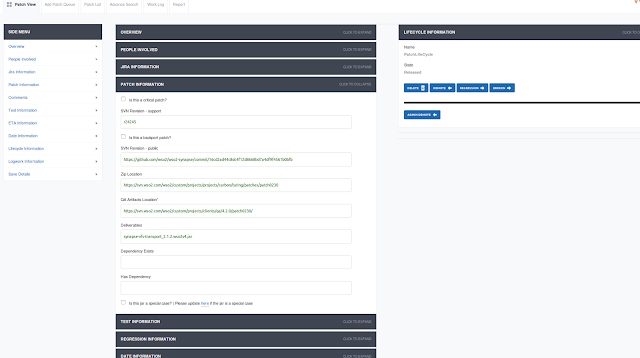

The intention of the new PMT application was to provide a very user-friendly view and to ensure that patch developers follow all the necessary steps in creating a patch. For example, a developer cannot proceed from the 'development' state if he has not updated the support JIRA, public JIRA and svn revision fields. If he says automation testing is 'Not possible', he has to give reasons. If he has done tests, he has to give the test commit location, etc. Yes, we had occasional disturbed use cases. Special thanks to Paraparan and Ashan, who fixed these cases in no time.
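The validation rules described above can be sketched as a simple function. The real application is written in Jaggery; this Python version only illustrates the rules, and the field names and the "Done" option value are hypothetical.

```python
def development_state_errors(patch):
    """Return the reasons a patch may not leave the 'development' state (empty list = OK)."""
    errors = []
    # These three fields must be filled in before the patch can move on.
    for field in ("support_jira", "public_jira", "svn_revision"):
        if not patch.get(field):
            errors.append(f"{field} is required")
    automation = patch.get("automation_testing")
    # Saying automation is not possible requires a justification.
    if automation == "Not possible" and not patch.get("automation_reason"):
        errors.append("a reason is required when automation testing is 'Not possible'")
    # Saying tests were written requires pointing to where they were committed.
    if automation == "Done" and not patch.get("test_commit_location"):
        errors.append("test_commit_location is required when tests were done")
    return errors


incomplete = {"support_jira": "SUPPORT-1", "automation_testing": "Not possible"}
complete = {
    "support_jira": "SUPPORT-1",
    "public_jira": "PUBLIC-1",
    "svn_revision": "12345",
    "automation_testing": "Done",
    "test_commit_location": "tests/patch-example",
}
print(development_state_errors(incomplete))
print(development_state_errors(complete))
```

Returning all problems at once, rather than stopping at the first one, lets the UI show the developer everything that is still missing in a single pass.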

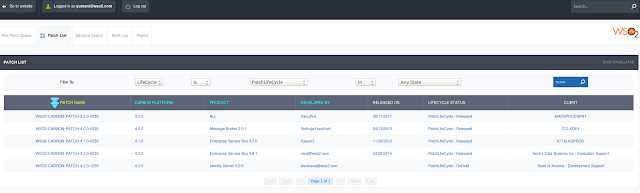

Image 1: Patch Listing

Image 2: Patch Details

This application was highly appreciated by the users as it allowed auto generation of patch numbers, pre-populated data for customer projects, users, products and versions etc. As promised it duly validates all mandatory inputs by creating a direct bind to user activities.

Today, the application has been further developed to capture various patch categories such as ported patches, critical patches and preQA patches. The G-Reg based life cycle has also been further developed to cater to pre patch creation and post patch creation tasks. It was Inshaf who implemented these enhancements. We are also working on new features such as updating customers with ETAs for patches based on three point estimation, looping in leads and rigorously following up on delays.

The application was also deployed in an HA setup by Chamara, Inshaf and Ashan for higher scalability, where the back-end G-Reg is clustered to 4 nodes fronted by Nginx, which sits between the client application and the BE. We have about 100+ engineers accessing this application daily, with a concurrency of around 15, for adding patches to the patch queue, generating patch numbers, progressing patches through the patch life cycle and searching for meta information.

Additionally, the PMT database is routinely queried by several other client programs: Patch Health clients, where we generate reports on patches which are not process complete; Service Pack generation, where the whole database is read to extract the patches belonging to a given product version and its kernel; and reports for QA at the time of release testing, where they look for use cases patched for customers. Most of these client applications are written using the governance API.

PMT is a good example of a simple use case which expanded into a full scale system. It is much used in WSO2 support today and we have extended it with many new features to support the increasing support demands. We have been able to leverage the Jaggery framework for all UI level enhancements. Governance Registry's ability to define any type of governance asset and its customizable life cycle management feature pioneered the inception of this tool, and helped in catering to the different data patterns that we wanted to preserve and the life cycle changes that needed to be added later as the process changed. We were able to add customized UIs to the application with the use of the handler concept in G-Reg. When the front-end Jaggery web application was introduced, we had a very seamless integration since the back-end could be accessed via the governance API. Increasing load demands were well supported by Carbon clustering and high availability concepts.
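For readers unfamiliar with three point estimation, the commonly used PERT form weights the most likely value four times against the optimistic and pessimistic ones. The sketch below uses that form; whether PMT uses exactly this weighting is an assumption on my part, and the function name, the sample figures and the use of calendar days are purely illustrative.

```python
from datetime import date, timedelta


def three_point_estimate(optimistic, most_likely, pessimistic):
    """PERT-style estimate: returns (expected value, standard deviation)."""
    if not optimistic <= most_likely <= pessimistic:
        raise ValueError("expected optimistic <= most_likely <= pessimistic")
    expected = (optimistic + 4 * most_likely + pessimistic) / 6
    std_dev = (pessimistic - optimistic) / 6
    return expected, std_dev


# Hypothetical patch: 2 days at best, 4 days most likely, 12 days at worst.
expected_days, spread = three_point_estimate(2, 4, 12)
eta = date(2015, 6, 1) + timedelta(days=round(expected_days))
print(expected_days, eta)  # 5.0 2015-06-06
```

The spread gives a feel for how uncertain the ETA is, which is useful when deciding how early to loop in a lead.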

Thursday, June 18, 2015

hazelcast.max.no.heartbeat.seconds property

The optimum value for this property would depend on your system.

Steps on how to configure the hazelcast.max.no.heartbeat.seconds.

- Create a property file called hazelcast.properties, and add the following properties to it.

hazelcast.max.no.master.confirmation.seconds=45

- Place this file in CARBON_HOME/repository/conf/ folder of all your Carbon nodes.

- Restart the servers.

Monday, April 7, 2014

What happens to HTTP transport when service level security is enabled in carbon 4.2.0

In Carbon 4.2.0 products, for example WSO2 AS 5.2.0, when you apply security the HTTP endpoint is disabled and disappears from the service dashboard as well.

Service Dashboard

wsdl1.1

In earlier Carbon versions this did not happen; both endpoints used to still appear even if you had enabled security.

Knowing this, I tried accessing the HTTP endpoint, and when that failed I tried;

- restarting the server,

- dis-engaging security

but neither helped.

The reason is that this is not a bug, but is by design. The HTTP transport is disabled when you enable security, and to activate it again you need to enable HTTP from the service level transport settings.

Transport management view - HTTP disabled

Change above as this;

Transport management view - HTTP enabled

Sunday, April 6, 2014

Fine Grained Authorization scenario

A request coming from the client will be authenticated at the WSO2 ESB proxy, which acts as an XACML PEP and authorizes the request to access the back-end service by having it evaluated at WSO2 IS, which acts as the XACML PDP.

So the actors in the scenario are;

PEP - Policy Enforcement Point - WSO2 ESB

PDP - Policy Decision Point - WSO2 IS

BE - echo service in WSO2AS

client - SoapUI

Let's try step by step:

1) Configure Entitlement proxy (ESB-4.8.0)

a) Create a custom proxy, giving echo service as the wsdl;

WSDL URI - http://localhost:9765/services/echo?wsdl

b) In-Sequence

- Select Entitlement mediator and add entitlement information

Entitlement Server - https://localhost:9444/services/

Username - admin

Password - admin

Entitlement Callback Handler - UT

Entitlement Service Client Type - SOAP - Basic Auth

- Add results sequences for OnAccept and OnReject nodes.

OnReject as below;

OnAccept as below - send mediator to BE service;

c) OutSequence

-Add a send mediator

My complete proxy service is built like this;

<?xml version="1.0" encoding="UTF-8"?>

<proxy xmlns="http://ws.apache.org/ns/synapse" name="EntitlementProxy" transports="https" statistics="disable" trace="disable" startOnLoad="true">

<target>

<inSequence>

<entitlementService remoteServiceUrl="https://localhost:9444/services/" remoteServiceUserName="admin" remoteServicePassword="enc:kuv2MubUUveMyv6GeHrXr9il59ajJIqUI4eoYHcgGKf/BBFOWn96NTjJQI+wYbWjKW6r79S7L7ZzgYeWx7DlGbff5X3pBN2Gh9yV0BHP1E93QtFqR7uTWi141Tr7V7ZwScwNqJbiNoV+vyLbsqKJE7T3nP8Ih9Y6omygbcLcHzg=" callbackClass="org.wso2.carbon.identity.entitlement.mediator.callback.UTEntitlementCallbackHandler" client="basicAuth">

<onReject>

<makefault version="soap11">

<code xmlns:soap11Env="http://schemas.xmlsoap.org/soap/envelope/" value="soap11Env:VersionMismatch"/>

<reason value="Wrong Value"/>

<role/>

</makefault>

</onReject>

<onAccept>

<send>

<endpoint>

<address uri="https://localhost:9445/services/echo"/>

</endpoint>

</send>

</onAccept>

<obligations/>

<advice/>

</entitlementService>

</inSequence>

<outSequence>

<send/>

</outSequence>

<faultSequence>

<send/>

</faultSequence>

</target>

<publishWSDL uri="http://localhost:9765/services/echo?wsdl"/>

<enableSec/>

<policy key="conf:/repository/axis2/service-groups/EntitlementProxy/services/EntitlementProxy/policies/UTOverTransport"/>

<description/>

</proxy>

2) Start the back-end service.

In my scenario it is the echo service in WSO2AS-5.2.0

https://192.168.1.3:9445/services/echo/

3) Configure XACML Policy using IS-4.5.0

a) Go to Policy Administration > Add New Entitlement Policy > Simple Policy Editor

b) Give a name to the policy and fill in other required data.

This policy is based on - Resource

Resource which is equals to -{echo} ---> wild card entry for BE service name.

Action - read

<Policy xmlns="urn:oasis:names:tc:xacml:3.0:core:schema:wd-17" PolicyId="TestPolicy" RuleCombiningAlgId="urn:oasis:names:tc:xacml:1.0:rule-combining-algorithm:first-applicable" Version="1.0">

<Target>

<AnyOf>

<AllOf>

<Match MatchId="urn:oasis:names:tc:xacml:1.0:function:string-regexp-match">

<AttributeValue DataType="http://www.w3.org/2001/XMLSchema#string">echo</AttributeValue>

<AttributeDesignator AttributeId="urn:oasis:names:tc:xacml:1.0:resource:resource-id" Category="urn:oasis:names:tc:xacml:3.0:attribute-category:resource" DataType="http://www.w3.org/2001/XMLSchema#string" MustBePresent="true"/>

</Match>

</AllOf>

</AnyOf>

</Target>

<Rule Effect="Permit" RuleId="Rule-1">

<Target>

<AnyOf>

<AllOf>

<Match MatchId="urn:oasis:names:tc:xacml:1.0:function:string-equal">

<AttributeValue DataType="http://www.w3.org/2001/XMLSchema#string">read</AttributeValue>

<AttributeDesignator AttributeId="urn:oasis:names:tc:xacml:1.0:action:action-id" Category="urn:oasis:names:tc:xacml:3.0:attribute-category:action" DataType="http://www.w3.org/2001/XMLSchema#string" MustBePresent="true"/>

</Match>

</AllOf>

</AnyOf>

</Target>

<Condition>

<Apply FunctionId="urn:oasis:names:tc:xacml:1.0:function:any-of">

<Function FunctionId="urn:oasis:names:tc:xacml:1.0:function:string-equal"/>

<AttributeValue DataType="http://www.w3.org/2001/XMLSchema#string">admin</AttributeValue>

<AttributeDesignator AttributeId="urn:oasis:names:tc:xacml:1.0:subject:subject-id" Category="urn:oasis:names:tc:xacml:1.0:subject-category:access-subject" DataType="http://www.w3.org/2001/XMLSchema#string" MustBePresent="true"/>

</Apply>

</Condition>

</Rule>

<Rule Effect="Deny" RuleId="Deny-Rule"/>

</Policy>

c) After creating the policy, Click 'Publish To My PDP' link.

d) Go to 'Policy View' and press 'Enable'

e) To validate the policy, create a request and tryit. Click on the 'TryIt' link of the policy (in the 'Policy Administration' page) and give request information as below;

<Request xmlns="urn:oasis:names:tc:xacml:3.0:core:schema:wd-17" CombinedDecision="false" ReturnPolicyIdList="false">

<Attributes Category="urn:oasis:names:tc:xacml:3.0:attribute-category:action">

<Attribute AttributeId="urn:oasis:names:tc:xacml:1.0:action:action-id" IncludeInResult="false">

<AttributeValue DataType="http://www.w3.org/2001/XMLSchema#string">read</AttributeValue>

</Attribute>

</Attributes>

<Attributes Category="urn:oasis:names:tc:xacml:1.0:subject-category:access-subject">

<Attribute AttributeId="urn:oasis:names:tc:xacml:1.0:subject:subject-id" IncludeInResult="false">

<AttributeValue DataType="http://www.w3.org/2001/XMLSchema#string">admin</AttributeValue>

</Attribute>

</Attributes>

<Attributes Category="urn:oasis:names:tc:xacml:3.0:attribute-category:resource">

<Attribute AttributeId="urn:oasis:names:tc:xacml:1.0:resource:resource-id" IncludeInResult="false">

<AttributeValue DataType="http://www.w3.org/2001/XMLSchema#string">{echo}</AttributeValue>

</Attribute>

</Attributes>

</Request>
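To see what decision to expect from TryIt, the logic of TestPolicy can be walked through by hand. The sketch below is a plain Python rendering of that policy, not the PDP's implementation: the policy target is a regular expression match on the resource, Rule-1 permits when the action is 'read' and the subject is 'admin', and the first-applicable algorithm falls through to Deny-Rule otherwise. It deliberately ignores missing attributes (which a real PDP would report as Indeterminate), and the function name is made up for the example.

```python
import re


def evaluate_test_policy(resource, action, subject):
    """Hand-written rendering of TestPolicy; returns 'Permit', 'Deny' or 'NotApplicable'."""
    # Policy target: string-regexp-match of the pattern 'echo' against resource-id.
    if not re.search("echo", resource):
        return "NotApplicable"
    # Rule-1 (Permit): target needs action-id == 'read', condition needs subject-id == 'admin'.
    if action == "read" and subject == "admin":
        return "Permit"
    # Deny-Rule has no target or condition, so it applies whenever Rule-1 did not.
    return "Deny"


# The TryIt request above: resource '{echo}', action 'read', subject 'admin'.
print(evaluate_test_policy("{echo}", "read", "admin"))
print(evaluate_test_policy("{echo}", "write", "admin"))
print(evaluate_test_policy("other", "read", "admin"))
```

The regular expression matches anywhere in the resource value, which is why the '{echo}' value in the request still falls under the policy target.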

4) Send a request from client

a) Launch SoapUI and create a project using echo service wsdl.

- Add username/password in request properties

- Set the proxy url as the endpoint URL

- Send the request

NOTE:

Enable DEBUG logs in PDP and view the request and response as below;

a) Open IS_HOME/repository/conf/log4j.properties

b) Add following line

log4j.logger.org.wso2.carbon.identity.entitlement=DEBUG

c) View IS logs as below;

Saturday, April 5, 2014

Using operations scope to hold my values while iterating

With a lot of help from IsuruU, I managed to work out this solution. Here I am iterating through this message [a] and breaking it into sub messages using this [b] pattern. I need to collect the values of '//m0:symbol' in a property, and send the processed values to the client and the values of failed messages to the failure sequence.

[a] - Request message

<soapenv:Envelope xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/">

<soapenv:Body>

<m0:getQuote xmlns:m0="http://services.samples">

<m0:request>

<m0:symbol>IBM</m0:symbol>

</m0:request>

<m0:request>

<m0:symbol>WSO2</m0:symbol>

</m0:request>

<m0:request>

<m0:symbol>AAA</m0:symbol>

</m0:request>

<m0:request>

<m0:symbol>SUN</m0:symbol>

</m0:request>

</m0:getQuote>

</soapenv:Body>

</soapenv:Envelope>

[b] - XPath expression "//m0:getQuote/m0:request"

Here is my inSequence:

<inSequence>

<iterate xmlns:m0="http://services.samples"

continueParent="true"

preservePayload="true"

attachPath="//m0:getQuote"

expression="//m0:getQuote/m0:request"

sequential="true">

<target>

<sequence>

<property name="PID"

expression="fn:concat(get-property('operation','PID'),//m0:symbol,' ')"

scope="operation"

type="STRING"/>

<store messageStore="pid_store"/>

</sequence>

</target>

</iterate>

<log level="custom">

<property name="Processed_PIDs" expression="get-property('operation','PID')"/>

</log>

<payloadFactory media-type="xml">

<format>

<ax21:getQuoteResponse xmlns:ax21="http://services.samples/xsd">

<ax21:pid>$1</ax21:pid>

</ax21:getQuoteResponse>

</format>

<args>

<arg xmlns:ns="http://org.apache.synapse/xsd"

evaluator="xml"

expression="get-property('operation','PID')"/>

</args>

</payloadFactory>

<respond/>

</inSequence>

Let me explain the above. With 'expression="//m0:getQuote/m0:request"' the request message will be split into separate messages, as I described earlier. Since my scenario was to collect a given value from each of the split messages and send them along the appropriate path as a single message, I have used continueParent="true" and sequential="true". With the latter I get sequential processing instead of the default parallel processing behaviour of the iterator.

Then, as the target sequence within the iterator that mediates each split message, I have added a property mediator. Using this, I am collecting the value of //m0:symbol and appending it to a variable (property name) 'PID'.

The scope of the PID property was set to scope="operation" to preserve the property across the iterated message flow.

Later, as per the initial requirement, the message is sent to a message store. A log of the property is printed for ease of tracking. Then I prepared a payload to send the message with the PID value in it.
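Outside the ESB, the accumulation that the iterate and property mediators perform can be imitated with a few lines of Python. This is only a sketch of the idea, using a shortened copy of request [a]; it assumes the PID property starts out empty and that the split messages are processed in document order, as sequential="true" suggests.

```python
import xml.etree.ElementTree as ET

# Shortened copy of request message [a].
REQUEST = """
<soapenv:Envelope xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/">
  <soapenv:Body>
    <m0:getQuote xmlns:m0="http://services.samples">
      <m0:request><m0:symbol>IBM</m0:symbol></m0:request>
      <m0:request><m0:symbol>WSO2</m0:symbol></m0:request>
      <m0:request><m0:symbol>AAA</m0:symbol></m0:request>
      <m0:request><m0:symbol>SUN</m0:symbol></m0:request>
    </m0:getQuote>
  </soapenv:Body>
</soapenv:Envelope>
"""

NS = {"m0": "http://services.samples"}


def collect_symbols(envelope_xml):
    """Mimic fn:concat(get-property('operation','PID'), //m0:symbol, ' ') per split message."""
    root = ET.fromstring(envelope_xml)
    pid = ""  # plays the role of the operation-scoped PID property
    for request in root.findall(".//m0:getQuote/m0:request", NS):
        symbol = request.findtext("m0:symbol", default="", namespaces=NS)
        pid = pid + symbol + " "
    return pid


print(repr(collect_symbols(REQUEST)))  # 'IBM WSO2 AAA SUN '
```

Note the trailing space left by the concat, which is why the fault sequence trims the value before using it.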

Fault sequence was done like this to capture faulty messages and pass their PIDs.

<faultSequence>

<log level="full">

<property name="MESSAGE"

value="--------Executing default &quot;fault&quot; sequence--------"/>

<property name="ERROR_CODE" expression="get-property('ERROR_CODE')"/>

<property name="ERROR_MESSAGE" expression="get-property('ERROR_MESSAGE')"/>

</log>

<property xmlns:m0="http://services.samples"

name="PID"

expression="fn:substring(fn:concat(get-property('operation','PID'),//m0:symbol,' '),1,(fn:string-length(get-property('operation','PID'))-1))"

scope="operation"

type="STRING"/>

<log level="custom">

<property name="Failed_PIDs" expression="get-property('operation','PID')"/>

</log>

<payloadFactory media-type="xml">

<format>

<ax21:getQuoteResponse xmlns:ax21="http://services.samples/xsd">

<ax21:pid>$1</ax21:pid>

</ax21:getQuoteResponse>

</format>

<args>

<arg xmlns:ns="http://org.apache.synapse/xsd"

evaluator="xml"

expression="get-property('operation','PID')"/>

</args>

</payloadFactory>

<respond/>

</faultSequence>

The complete proxy configuration can be found here. TCPMon outputs are attached below for further clarity.

Friday, February 14, 2014

EnableSecureVault in API Manager 1.6.0

1. Change element <EnableSecureVault> in <APIM_HOME>/repository/conf/api-manager.xml to true.

<EnableSecureVault>true</EnableSecureVault>

2. Update the synapse.properties file in <APIM_HOME>/repository/conf with the following synapse property.

synapse.xpath.func.extensions=org.wso2.carbon.mediation.security.vault.xpath.SecureVaultLookupXPathFunctionProvider

3. Run the cipher tool available in <APIM_HOME>/bin to create secret repositories.

#ciphertool.sh -Dconfigure

4. In the api configuration under <APIM_HOME>/repository/deployment/server/synapse-configs, replace;

<property name="Authorization" expression="fn:concat('Basic ', base64Encode('admin:admin'))" scope="transport"/>

property in the api's with;

<property name="password" expression="wso2:vault-lookup('secured.endpoint.password')"/>

For example: I have an api called 'shoppingCart' created by admin.

So I need to change above entries in repository/deployment/server/synapse-configs/default/api/admin--shoppingCart_v1.0.0.xml

5. When starting the server, it will prompt you to enter the keystore password.

That's all. The above changes need to be done on the Gateway node.
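To see why it is worth moving the credentials out of the API configuration, it helps to look at what the original Authorization property produces. The Python sketch below reproduces the same 'Basic ' plus base64 construction; it is an illustration only, and the function name is made up.

```python
import base64


def basic_auth_header(username, password):
    """Build the value of an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return "Basic " + token


header = basic_auth_header("admin", "admin")
print(header)  # Basic YWRtaW46YWRtaW4=

# Base64 is an encoding, not encryption: anyone who sees the value can reverse it.
print(base64.b64decode(header.split(" ", 1)[1]).decode("utf-8"))  # admin:admin
```

With the vault lookup in place, the plain-text password no longer sits in the API's XML file.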

Wednesday, February 5, 2014

RhinoEngine} java.lang.reflect.InvocationTargetException when accessing APIM-store -- was my fault

This was due to an unclosed tag in store/repository/conf/api-manager.xml.
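A quick well-formedness check before restarting the server would have caught this. Here is a minimal sketch using Python's standard XML parser; the two sample strings are made-up fragments for illustration, not the real api-manager.xml content, and the helper name is hypothetical.

```python
import xml.etree.ElementTree as ET


def xml_error(text):
    """Return the parser's error message if the XML is not well-formed, else None."""
    try:
        ET.fromstring(text)
    except ET.ParseError as err:
        return str(err)
    return None


# Illustrative fragments only.
well_formed = "<APIManager><DataSourceName>jdbc/WSO2AM_DB</DataSourceName></APIManager>"
unclosed = "<APIManager><DataSourceName>jdbc/WSO2AM_DB</APIManager>"

print(xml_error(well_formed))
print(xml_error(unclosed))
```

For a file on disk, the same check can be run with ET.parse on the file path; the error message includes the line and column where the parser gave up.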

[1]

TID: [0] [AM] [2014-02-06 10:41:49,048] ERROR {org.jaggeryjs.scriptengine.engine.RhinoEngine} - {org.jaggeryjs.scriptengine.engine.RhinoEngine}

java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:525)

at org.mozilla.javascript.ScriptableObject.buildClassCtor(ScriptableObject.java:1048)

at org.mozilla.javascript.ScriptableObject.defineClass(ScriptableObject.java:989)

at org.mozilla.javascript.ScriptableObject.defineClass(ScriptableObject.java:923)

at org.jaggeryjs.scriptengine.engine.RhinoEngine.defineClass(RhinoEngine.java:331)

at org.jaggeryjs.scriptengine.engine.RhinoEngine.exposeModule(RhinoEngine.java:349)

at org.jaggeryjs.scriptengine.engine.RhinoEngine.getRuntimeScope(RhinoEngine.java:265)

at org.jaggeryjs.jaggery.core.manager.CommonManager.initContext(CommonManager.java:71)

at org.jaggeryjs.jaggery.core.manager.WebAppManager.initContext(WebAppManager.java:242)

at org.jaggeryjs.jaggery.core.manager.WebAppManager.execute(WebAppManager.java:266)

at org.jaggeryjs.jaggery.core.JaggeryServlet.doGet(JaggeryServlet.java:24)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:735)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:848)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:305)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:210)

at org.apache.catalina.core.StandardWrapperValve.invoke(StandardWrapperValve.java:222)

at org.apache.catalina.core.StandardContextValve.invoke(StandardContextValve.java:123)

at org.apache.catalina.authenticator.AuthenticatorBase.invoke(AuthenticatorBase.java:472)

at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:171)

at org.apache.catalina.valves.ErrorReportValve.invoke(ErrorReportValve.java:99)

at org.wso2.carbon.tomcat.ext.valves.CompositeValve.invoke(CompositeValve.java:177)

at org.wso2.carbon.tomcat.ext.valves.CarbonStuckThreadDetectionValve.invoke(CarbonStuckThreadDetectionValve.java:161)

at org.apache.catalina.valves.AccessLogValve.invoke(AccessLogValve.java:936)

at org.wso2.carbon.tomcat.ext.valves.CarbonContextCreatorValve.invoke(CarbonContextCreatorValve.java:57)

at org.apache.catalina.core.StandardEngineValve.invoke(StandardEngineValve.java:118)

at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:407)

at org.apache.coyote.http11.AbstractHttp11Processor.process(AbstractHttp11Processor.java:1004)

at org.apache.coyote.AbstractProtocol$AbstractConnectionHandler.process(AbstractProtocol.java:589)

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.run(NioEndpoint.java:1653)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

at java.lang.Thread.run(Thread.java:722)

Caused by: java.lang.NullPointerException

at org.wso2.carbon.apimgt.impl.dao.ApiMgtDAO.<init>(ApiMgtDAO.java:87)

at org.wso2.carbon.apimgt.impl.AbstractAPIManager.<init>(AbstractAPIManager.java:68)

at org.wso2.carbon.apimgt.impl.APIConsumerImpl.<init>(APIConsumerImpl.java:81)

at org.wso2.carbon.apimgt.impl.UserAwareAPIConsumer.<init>(UserAwareAPIConsumer.java:43)

at org.wso2.carbon.apimgt.impl.APIManagerFactory.newConsumer(APIManagerFactory.java:56)

at org.wso2.carbon.apimgt.impl.APIManagerFactory.getAPIConsumer(APIManagerFactory.java:89)

at org.wso2.carbon.apimgt.impl.APIManagerFactory.getAPIConsumer(APIManagerFactory.java:77)

at org.wso2.carbon.apimgt.hostobjects.APIStoreHostObject.<init>(APIStoreHostObject.java:96)

... 35 more

TID: [0] [AM] [2014-02-06 10:41:49,665] ERROR {org.jaggeryjs.scriptengine.engine.RhinoEngine} - Error while registering the hostobject : org.wso2.carbon.apimgt.hostobjects.APIStoreHostObject {org.jaggeryjs.scriptengine.engine.RhinoEngine}

java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:525)

at org.mozilla.javascript.ScriptableObject.buildClassCtor(ScriptableObject.java:1048)

at org.mozilla.javascript.ScriptableObject.defineClass(ScriptableObject.java:989)

at org.mozilla.javascript.ScriptableObject.defineClass(ScriptableObject.java:923)

at org.jaggeryjs.scriptengine.engine.RhinoEngine.defineHostObject(RhinoEngine.java:69)

at org.jaggeryjs.jaggery.core.manager.CommonManager.exposeModule(CommonManager.java:238)

at org.jaggeryjs.jaggery.core.manager.CommonManager.require(CommonManager.java:232)

at org.jaggeryjs.jaggery.core.manager.WebAppManager.require(WebAppManager.java:218)

at sun.reflect.GeneratedMethodAccessor47.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:601)

at org.mozilla.javascript.MemberBox.invoke(MemberBox.java:160)

at org.mozilla.javascript.FunctionObject.call(FunctionObject.java:411)

at org.mozilla.javascript.optimizer.OptRuntime.callName(OptRuntime.java:97)

at org.jaggeryjs.rhino.store.modules.manager.c1._c8(/store/modules/manager/manager.jag:38)

at org.jaggeryjs.rhino.store.modules.manager.c1.call(/store/modules/manager/manager.jag)

at org.mozilla.javascript.ScriptRuntime.applyOrCall(ScriptRuntime.java:2347)

at org.mozilla.javascript.BaseFunction.execIdCall(BaseFunction.java:272)

at org.mozilla.javascript.IdFunctionObject.call(IdFunctionObject.java:127)

at org.mozilla.javascript.optimizer.OptRuntime.call2(OptRuntime.java:76)

at org.jaggeryjs.rhino.store.modules.manager.c0._c6(/store/modules/manager/module.jag:22)

at org.jaggeryjs.rhino.store.modules.manager.c0.call(/store/modules/manager/module.jag)

at org.mozilla.javascript.optimizer.OptRuntime.callProp0(OptRuntime.java:119)

at org.jaggeryjs.rhino.store.modules.api.c1._c1(/store/modules/api/recently-added.jag:3)

at org.jaggeryjs.rhino.store.modules.api.c1.call(/store/modules/api/recently-added.jag)

at org.mozilla.javascript.ScriptRuntime.applyOrCall(ScriptRuntime.java:2347)

at org.mozilla.javascript.BaseFunction.execIdCall(BaseFunction.java:272)

at org.mozilla.javascript.IdFunctionObject.call(IdFunctionObject.java:127)

at org.mozilla.javascript.optimizer.OptRuntime.call2(OptRuntime.java:76)

at org.jaggeryjs.rhino.store.modules.api.c0._c4(/store/modules/api/module.jag:16)

at org.jaggeryjs.rhino.store.modules.api.c0.call(/store/modules/api/module.jag)

at org.mozilla.javascript.optimizer.OptRuntime.call2(OptRuntime.java:76)

at org.jaggeryjs.rhino.store.site.blocks.api.recently_added.c0._c2(/store/site/blocks/api/recently-added/block.jag:10)

at org.jaggeryjs.rhino.store.site.blocks.api.recently_added.c0.call(/store/site/blocks/api/recently-added/block.jag)

at org.mozilla.javascript.optimizer.OptRuntime.call1(OptRuntime.java:66)

at org.jaggeryjs.rhino.store.jagg.c0._c26(/store/jagg/jagg.jag:198)

at org.jaggeryjs.rhino.store.jagg.c0.call(/store/jagg/jagg.jag)

at org.mozilla.javascript.optimizer.OptRuntime.callName(OptRuntime.java:97)

at org.jaggeryjs.rhino.store.jagg.c0._c27(/store/jagg/jagg.jag:258)

at org.jaggeryjs.rhino.store.jagg.c0.call(/store/jagg/jagg.jag)

at org.mozilla.javascript.optimizer.OptRuntime.callName(OptRuntime.java:97)

at org.jaggeryjs.rhino.store.jagg.c0._c26(/store/jagg/jagg.jag:210)

at org.jaggeryjs.rhino.store.jagg.c0.call(/store/jagg/jagg.jag)

at org.mozilla.javascript.optimizer.OptRuntime.callName(OptRuntime.java:97)

at org.jaggeryjs.rhino.store.jagg.c0._c27(/store/jagg/jagg.jag:258)

at org.jaggeryjs.rhino.store.jagg.c0.call(/store/jagg/jagg.jag)

at org.mozilla.javascript.optimizer.OptRuntime.callName(OptRuntime.java:97)

at org.jaggeryjs.rhino.store.jagg.c0._c26(/store/jagg/jagg.jag:193)

at org.jaggeryjs.rhino.store.jagg.c0.call(/store/jagg/jagg.jag)

at org.mozilla.javascript.optimizer.OptRuntime.callName(OptRuntime.java:97)

at org.jaggeryjs.rhino.store.jagg.c0._c38(/store/jagg/jagg.jag:423)

at org.jaggeryjs.rhino.store.jagg.c0.call(/store/jagg/jagg.jag)

at org.mozilla.javascript.optimizer.OptRuntime.call1(OptRuntime.java:66)

at org.jaggeryjs.rhino.store.site.pages.c0._c1(/store/site/pages/list-apis.jag:13)

at org.jaggeryjs.rhino.store.site.pages.c0.call(/store/site/pages/list-apis.jag)

at org.mozilla.javascript.optimizer.OptRuntime.call0(OptRuntime.java:57)

at org.jaggeryjs.rhino.store.site.pages.c0._c0(/store/site/pages/list-apis.jag:10)

at org.jaggeryjs.rhino.store.site.pages.c0.call(/store/site/pages/list-apis.jag)

at org.mozilla.javascript.ContextFactory.doTopCall(ContextFactory.java:401)

at org.mozilla.javascript.ScriptRuntime.doTopCall(ScriptRuntime.java:3003)

at org.jaggeryjs.rhino.store.site.pages.c0.call(/store/site/pages/list-apis.jag)

at org.jaggeryjs.rhino.store.site.pages.c0.exec(/store/site/pages/list-apis.jag)

at org.jaggeryjs.scriptengine.engine.RhinoEngine.execScript(RhinoEngine.java:441)

at org.jaggeryjs.scriptengine.engine.RhinoEngine.exec(RhinoEngine.java:191)

at org.jaggeryjs.jaggery.core.manager.WebAppManager.execute(WebAppManager.java:269)

at org.jaggeryjs.jaggery.core.JaggeryServlet.doGet(JaggeryServlet.java:24)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:735)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:848)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:305)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:210)

at org.apache.catalina.core.StandardWrapperValve.invoke(StandardWrapperValve.java:222)

at org.apache.catalina.core.StandardContextValve.invoke(StandardContextValve.java:123)

at org.apache.catalina.authenticator.AuthenticatorBase.invoke(AuthenticatorBase.java:472)

at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:171)

at org.apache.catalina.valves.ErrorReportValve.invoke(ErrorReportValve.java:99)

at org.wso2.carbon.tomcat.ext.valves.CompositeValve.invoke(CompositeValve.java:177)

at org.wso2.carbon.tomcat.ext.valves.CarbonStuckThreadDetectionValve.invoke(CarbonStuckThreadDetectionValve.java:161)

at org.apache.catalina.valves.AccessLogValve.invoke(AccessLogValve.java:936)

at org.wso2.carbon.tomcat.ext.valves.CarbonContextCreatorValve.invoke(CarbonContextCreatorValve.java:57)

at org.apache.catalina.core.StandardEngineValve.invoke(StandardEngineValve.java:118)

at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:407)

at org.apache.coyote.http11.AbstractHttp11Processor.process(AbstractHttp11Processor.java:1004)

at org.apache.coyote.AbstractProtocol$AbstractConnectionHandler.process(AbstractProtocol.java:589)

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.run(NioEndpoint.java:1653)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

at java.lang.Thread.run(Thread.java:722)

Caused by: java.lang.NullPointerException

at org.wso2.carbon.apimgt.impl.dao.ApiMgtDAO.<init>(ApiMgtDAO.java:87)

at org.wso2.carbon.apimgt.impl.AbstractAPIManager.<init>(AbstractAPIManager.java:68)

at org.wso2.carbon.apimgt.impl.APIConsumerImpl.<init>(APIConsumerImpl.java:81)

at org.wso2.carbon.apimgt.impl.UserAwareAPIConsumer.<init>(UserAwareAPIConsumer.java:43)

at org.wso2.carbon.apimgt.impl.APIManagerFactory.newConsumer(APIManagerFactory.java:56)

at org.wso2.carbon.apimgt.impl.APIManagerFactory.getAPIConsumer(APIManagerFactory.java:89)

at org.wso2.carbon.apimgt.impl.APIManagerFactory.getAPIConsumer(APIManagerFactory.java:77)

at org.wso2.carbon.apimgt.hostobjects.APIStoreHostObject.<init>(APIStoreHostObject.java:96)

... 90 more

TID: [0] [AM] [2014-02-06 10:41:49,673] ERROR {org.jaggeryjs.scriptengine.engine.RhinoEngine} - org.mozilla.javascript.EcmaError: TypeError: org.mozilla.javascript.Undefined@7c93ca84 is not a function, it is undefined. (/store/modules/manager/manager.jag#39) {org.jaggeryjs.scriptengine.engine.RhinoEngine}

TID: [0] [AM] [2014-02-06 10:41:49,673] ERROR {org.jaggeryjs.jaggery.core.manager.WebAppManager} - org.mozilla.javascript.EcmaError: TypeError: org.mozilla.javascript.Undefined@7c93ca84 is not a function, it is undefined. (/store/modules/manager/manager.jag#39) {org.jaggeryjs.jaggery.core.manager.WebAppManager}

org.jaggeryjs.scriptengine.exceptions.ScriptException: org.mozilla.javascript.EcmaError: TypeError: org.mozilla.javascript.Undefined@7c93ca84 is not a function, it is undefined. (/store/modules/manager/manager.jag#39)

at org.jaggeryjs.scriptengine.engine.RhinoEngine.execScript(RhinoEngine.java:446)

at org.jaggeryjs.scriptengine.engine.RhinoEngine.exec(RhinoEngine.java:191)

at org.jaggeryjs.jaggery.core.manager.WebAppManager.execute(WebAppManager.java:269)

at org.jaggeryjs.jaggery.core.JaggeryServlet.doGet(JaggeryServlet.java:24)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:735)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:848)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:305)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:210)

at org.apache.catalina.core.StandardWrapperValve.invoke(StandardWrapperValve.java:222)

at org.apache.catalina.core.StandardContextValve.invoke(StandardContextValve.java:123)

at org.apache.catalina.authenticator.AuthenticatorBase.invoke(AuthenticatorBase.java:472)

at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:171)

at org.apache.catalina.valves.ErrorReportValve.invoke(ErrorReportValve.java:99)

at org.wso2.carbon.tomcat.ext.valves.CompositeValve.invoke(CompositeValve.java:177)

at org.wso2.carbon.tomcat.ext.valves.CarbonStuckThreadDetectionValve.invoke(CarbonStuckThreadDetectionValve.java:161)

at org.apache.catalina.valves.AccessLogValve.invoke(AccessLogValve.java:936)

at org.wso2.carbon.tomcat.ext.valves.CarbonContextCreatorValve.invoke(CarbonContextCreatorValve.java:57)

at org.apache.catalina.core.StandardEngineValve.invoke(StandardEngineValve.java:118)

at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:407)

at org.apache.coyote.http11.AbstractHttp11Processor.process(AbstractHttp11Processor.java:1004)

at org.apache.coyote.AbstractProtocol$AbstractConnectionHandler.process(AbstractProtocol.java:589)

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.run(NioEndpoint.java:1653)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

at java.lang.Thread.run(Thread.java:722)

Caused by: org.mozilla.javascript.EcmaError: TypeError: org.mozilla.javascript.Undefined@7c93ca84 is not a function, it is undefined. (/store/modules/manager/manager.jag#39)

at org.mozilla.javascript.ScriptRuntime.constructError(ScriptRuntime.java:3557)

at org.mozilla.javascript.ScriptRuntime.constructError(ScriptRuntime.java:3535)

at org.mozilla.javascript.ScriptRuntime.typeError(ScriptRuntime.java:3563)

at org.mozilla.javascript.ScriptRuntime.typeError2(ScriptRuntime.java:3582)

at org.mozilla.javascript.ScriptRuntime.notFunctionError(ScriptRuntime.java:3637)

at org.mozilla.javascript.ScriptRuntime.notFunctionError(ScriptRuntime.java:3625)

at org.mozilla.javascript.ScriptRuntime.newObject(ScriptRuntime.java:2263)

at org.jaggeryjs.rhino.store.modules.manager.c1._c8(/store/modules/manager/manager.jag:39)

at org.jaggeryjs.rhino.store.modules.manager.c1.call(/store/modules/manager/manager.jag)

at org.mozilla.javascript.ScriptRuntime.applyOrCall(ScriptRuntime.java:2347)

at org.mozilla.javascript.BaseFunction.execIdCall(BaseFunction.java:272)

at org.mozilla.javascript.IdFunctionObject.call(IdFunctionObject.java:127)

at org.mozilla.javascript.optimizer.OptRuntime.call2(OptRuntime.java:76)

at org.jaggeryjs.rhino.store.modules.manager.c0._c6(/store/modules/manager/module.jag:22)

at org.jaggeryjs.rhino.store.modules.manager.c0.call(/store/modules/manager/module.jag)

at org.mozilla.javascript.optimizer.OptRuntime.callProp0(OptRuntime.java:119)

at org.jaggeryjs.rhino.store.modules.api.c1._c1(/store/modules/api/recently-added.jag:3)

at org.jaggeryjs.rhino.store.modules.api.c1.call(/store/modules/api/recently-added.jag)

at org.mozilla.javascript.ScriptRuntime.applyOrCall(ScriptRuntime.java:2347)

at org.mozilla.javascript.BaseFunction.execIdCall(BaseFunction.java:272)

at org.mozilla.javascript.IdFunctionObject.call(IdFunctionObject.java:127)

at org.mozilla.javascript.optimizer.OptRuntime.call2(OptRuntime.java:76)

at org.jaggeryjs.rhino.store.modules.api.c0._c4(/store/modules/api/module.jag:16)

at org.jaggeryjs.rhino.store.modules.api.c0.call(/store/modules/api/module.jag)

at org.mozilla.javascript.optimizer.OptRuntime.call2(OptRuntime.java:76)

at org.jaggeryjs.rhino.store.site.blocks.api.recently_added.c0._c2(/store/site/blocks/api/recently-added/block.jag:10)

at org.jaggeryjs.rhino.store.site.blocks.api.recently_added.c0.call(/store/site/blocks/api/recently-added/block.jag)

at org.mozilla.javascript.optimizer.OptRuntime.call1(OptRuntime.java:66)

at org.jaggeryjs.rhino.store.jagg.c0._c26(/store/jagg/jagg.jag:198)

at org.jaggeryjs.rhino.store.jagg.c0.call(/store/jagg/jagg.jag)

at org.mozilla.javascript.optimizer.OptRuntime.callName(OptRuntime.java:97)

at org.jaggeryjs.rhino.store.jagg.c0._c27(/store/jagg/jagg.jag:258)

at org.jaggeryjs.rhino.store.jagg.c0.call(/store/jagg/jagg.jag)

at org.mozilla.javascript.optimizer.OptRuntime.callName(OptRuntime.java:97)

at org.jaggeryjs.rhino.store.jagg.c0._c26(/store/jagg/jagg.jag:210)

at org.jaggeryjs.rhino.store.jagg.c0.call(/store/jagg/jagg.jag)

at org.mozilla.javascript.optimizer.OptRuntime.callName(OptRuntime.java:97)

at org.jaggeryjs.rhino.store.jagg.c0._c27(/store/jagg/jagg.jag:258)

at org.jaggeryjs.rhino.store.jagg.c0.call(/store/jagg/jagg.jag)

at org.mozilla.javascript.optimizer.OptRuntime.callName(OptRuntime.java:97)

at org.jaggeryjs.rhino.store.jagg.c0._c26(/store/jagg/jagg.jag:193)

at org.jaggeryjs.rhino.store.jagg.c0.call(/store/jagg/jagg.jag)

at org.mozilla.javascript.optimizer.OptRuntime.callName(OptRuntime.java:97)

at org.jaggeryjs.rhino.store.jagg.c0._c38(/store/jagg/jagg.jag:423)

at org.jaggeryjs.rhino.store.jagg.c0.call(/store/jagg/jagg.jag)

at org.mozilla.javascript.optimizer.OptRuntime.call1(OptRuntime.java:66)

at org.jaggeryjs.rhino.store.site.pages.c0._c1(/store/site/pages/list-apis.jag:13)

at org.jaggeryjs.rhino.store.site.pages.c0.call(/store/site/pages/list-apis.jag)

at org.mozilla.javascript.optimizer.OptRuntime.call0(OptRuntime.java:57)

at org.jaggeryjs.rhino.store.site.pages.c0._c0(/store/site/pages/list-apis.jag:10)

at org.jaggeryjs.rhino.store.site.pages.c0.call(/store/site/pages/list-apis.jag)

at org.mozilla.javascript.ContextFactory.doTopCall(ContextFactory.java:401)

at org.mozilla.javascript.ScriptRuntime.doTopCall(ScriptRuntime.java:3003)

at org.jaggeryjs.rhino.store.site.pages.c0.call(/store/site/pages/list-apis.jag)

at org.jaggeryjs.rhino.store.site.pages.c0.exec(/store/site/pages/list-apis.jag)

at org.jaggeryjs.scriptengine.engine.RhinoEngine.execScript(RhinoEngine.java:441)

... 24 more

Wednesday, January 22, 2014

API Manager 1.6.0 - tenancy

Came across a question on API Manager tenancy ...

As per the docs [1]:

"WSO2 API Manager supports creating multiple tenants and managing APIs in a tenant-isolated manner. When you create multiple tenants in an API Manager deployment, the API Stores of each tenant will be displayed in a multi-tenanted view for all users to browse and permitted users to subscribe to."

Let's try it and see!

Step 1 - Create a tenant

- Log in to the management console - https://localhost:9443/carbon/

- Create a new tenant from Home > Configure > Multitenancy > Add New Tenant - let's say my tenant is yumani.com.

- Now if you check the API Store, you will see the tenant domains listed there.

- Log in to the management console using the tenant admin's credentials

- Create a new user role from Home > Configure > Users and Roles > Roles

- name - creator

- permissions - as given in [2]

- Create a role for subscriber

- name - subscriber

- permissions - login, Manage -> API -> Subscribe [3]

- Create a role for publisher

- name - publisher

- permissions - login, Manage -> API -> Publish [4]

- Create 3 users and assign one to each role.

- Log in to 'API Publisher' with the creator's account and create a new API.
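To keep the role setup straight, here is a small sketch that writes the roles above down as plain data. It is not an API Manager API, just a Python model for checking who should be able to do what. The user names are made up for illustration, and the creator role is left out because its permissions are only listed in [2].

```python
# Role -> permissions, copied from the steps above.
# The creator role is omitted: its permissions are given in [2], not in this post.
ROLES = {
    "subscriber": {"login", "Manage > API > Subscribe"},
    "publisher": {"login", "Manage > API > Publish"},
}

# Hypothetical users, one per role (names are illustrative only).
USERS = {
    "sub_user": "subscriber",
    "pub_user": "publisher",
}


def can(user, permission):
    """Return True if the user's role carries the given permission."""
    role = USERS.get(user)
    return permission in ROLES.get(role, set())


if __name__ == "__main__":
    for user in USERS:
        print(user, "can subscribe:", can(user, "Manage > API > Subscribe"))
```

A table like this is handy as a checklist while testing: every user should be able to log in, but only the subscriber should be able to subscribe from the store.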

Step 4 - View the API from store

- Now log in to the API Store using the subscriber's account

- You will see the above API.
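One thing to keep in mind when logging in: tenant users sign in with a tenant-qualified name, that is user@tenant-domain. The sketch below builds that name for the yumani.com tenant. The user name `subscriber1` is hypothetical, and the `?tenant=` query parameter on the store URL is my assumption about how the tenant store is addressed in this version, so verify it against your own deployment.

```python
from urllib.parse import urlencode


def tenant_username(user, tenant_domain):
    """Build the tenant-qualified login name, e.g. user@yumani.com."""
    return f"{user}@{tenant_domain}"


def tenant_store_url(base_url, tenant_domain):
    """Build a store URL for one tenant.

    Assumption: the store selects the tenant through a 'tenant' query parameter.
    """
    return f"{base_url}/store?{urlencode({'tenant': tenant_domain})}"


if __name__ == "__main__":
    # 'subscriber1' is a hypothetical user created under the tenant.
    print(tenant_username("subscriber1", "yumani.com"))
    print(tenant_store_url("https://localhost:9443", "yumani.com"))
```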

[3] - http://docs.wso2.org/display/AM160/User+Roles+in+the+API+Manager#UserRolesintheAPIManager-Thedefault subscriber role

[4] - http://docs.wso2.org/display/AM160/User+Roles+in+the+API+Manager#UserRolesintheAPIManager-Addingthe publisher role
